As data scientists, our task is to extract insights from data. Sometimes we don't have access to the data we need, or what we have isn't enough to reach conclusive findings. One way to find more information is to search the internet, but browsing a lot of web pages by hand can be a hassle and sometimes a waste of time.

Web Scraping

Scraping is the process of extracting information automatically from various sources, such as a database, a huge spreadsheet or a text file. Web scraping is scraping information from the different web pages of a website.

Is Web Scraping Illegal?

That depends on the website's terms of service and privacy policy, so research your target website before trying to scrape its data. Many websites don't allow scraping because it puts an additional burden on their servers, especially when you request their pages at a rapid rate. Be careful when doing so, because their firewall may block you from accessing the website.

* Read the website's terms of service and privacy policy before scraping it.
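Besides the terms of service, many websites publish a robots.txt file (for example https://example.com/robots.txt) that lists the paths automated clients should stay away from. The sketch below is a minimal illustration and assumes you have already downloaded the robots.txt text. It uses Python's standard `urllib.robotparser` to check a URL. It also includes a hypothetical `polite_fetch` helper that waits between requests so you don't hit a server at a rapid rate. robots.txt is only a convention and does not replace reading the terms of service.

```python
import time
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def polite_fetch(urls, fetch, delay_seconds=2.0):
    """Call fetch(url) for each URL, sleeping delay_seconds between calls.

    polite_fetch is a hypothetical helper; fetch is any function that downloads
    one page, e.g. a small wrapper around requests.get.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # throttle so we don't flood the server
        results.append(fetch(url))
    return results


# Hypothetical robots.txt content, for illustration only
rules = "User-agent: *\nDisallow: /admin/"
print(is_allowed(rules, "https://example.com/service/photographers"))  # True
print(is_allowed(rules, "https://example.com/admin/users"))            # False
```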

Scraping Tutorial

Prerequisite:

- Python installed, along with the requests, beautifulsoup4 and pandas packages

- A simple text editor/Jupyter Notebook

- Basic knowledge of HTML

Problem Statement

You are a junior data scientist, and your boss asks you to determine the average rate of a photographer in the Philippines. The problem is that you don't have accessible in-house data to answer that question. You search for "Photographer Rates in the Philippines" on Google and find a website called kwyzer.com. You're in luck, because Kwyzer Philippines is one of the leading event service provider marketplaces in the Philippines. You can now proceed to inspect the HTML structure of Kwyzer's website.

HTTP Methods Common in Web Scraping

GET and POST are the HTTP methods you will meet most often when scraping. They define how a CLIENT (your browser or script) exchanges data with a SERVER (the website).

The GET method is the most common. It is used to request information from a website, which the browser then displays as a web page.

The POST method is usually used to send data to the server, for example to create or update a record (a blog post, a listing, a product, etc.).

When you open a web page in your browser using a URL, you are using the GET method. When you submit a LOGIN or REGISTRATION form, it is usually a POST request. (Submitting a login form with GET is possible but insecure, because the credentials end up in the URL.)
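To see the difference without contacting any server, requests lets you build a request with `requests.Request(...).prepare()` and inspect it before anything is sent. In this sketch the example.com URLs and the form fields are hypothetical. The GET request carries its parameters in the URL's query string, while the POST request carries the form data in the request body.

```python
import requests

# GET: parameters are encoded into the URL (hypothetical search page)
search = requests.Request(
    "GET", "https://example.com/search", params={"q": "photographer"}
).prepare()

# POST: form fields travel in the request body, not in the URL (hypothetical login form)
login = requests.Request(
    "POST",
    "https://example.com/login",
    data={"username": "juan", "password": "secret"},
).prepare()

print(search.method, search.url)  # GET https://example.com/search?q=photographer
print(login.method, login.url)    # POST https://example.com/login
print(login.body)                 # username=juan&password=secret
```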

Python Code Example

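```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

url = 'https://kwyzer.com/service/hire-photographers-videographers-in-the-philippines'

# Send a GET request and stop with an error if the server returns a 4xx/5xx status
response = requests.get(url)
response.raise_for_status()
data = response.text

# Parse the HTML using Python's built-in parser
soup = BeautifulSoup(data, 'html.parser')

# Each photographer is listed inside a div with the class 'service-provider-card'
serviceProviderCards = soup.find_all('div', attrs={'class': 'service-provider-card'})

photographers = []

for serviceProviderCard in serviceProviderCards:
    # The photographer's name is in a div with the class 'service-type-name'
    serviceProviderName = serviceProviderCard.find('div', attrs={'class': 'service-type-name'})

    # The rate is nested: div with classes 'extra content' > div.mt-5 > strong
    rate = serviceProviderCard.find('div', attrs={'class': 'extra content'}) \
        .find('div', attrs={'class': 'mt-5'}) \
        .find('strong') \
        .text

    # strip() removes the surrounding whitespace that HTML text often contains
    photographers.append({'name': serviceProviderName.text.strip(), 'rate': rate.strip()})

# Collect the results in a DataFrame and save them as a CSV file
df = pd.DataFrame(photographers)
df.to_csv('photographers.csv', index=False)
```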

You can run this script in your terminal by typing:

```shell
python scrape.py
```

where scrape.py is the name of your Python file. You can also run the code in a Jupyter notebook.

Code Explanation

We import the libraries we need to scrape Kwyzer Philippines:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
```

requests - to send GET or POST HTTP requests to a website

BeautifulSoup - to parse the HTML in the response and search it

pandas (optional) - to easily inspect the data and save it as CSV for external use

We set our TARGET URL, send a GET request to it, and save the RESPONSE to a variable:

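```python
url = 'https://kwyzer.com/service/hire-photographers-videographers-in-the-philippines'

response = requests.get(url)
response.raise_for_status()  # raise an error on 4xx/5xx responses instead of parsing an error page
data = response.text
```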

Instantiate a BeautifulSoup object and pass it the response data, so we can search for the information we want:

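```python
# 'html.parser' is Python's built-in parser; naming it explicitly avoids a BeautifulSoup warning
soup = BeautifulSoup(data, 'html.parser')

serviceProviderCards = soup.find_all('div', attrs={'class': 'service-provider-card'})
```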

```python
soup.find_all('div', attrs={'class': 'service-provider-card'})
```

This means we find all the DIVs on the HTML page with the class 'service-provider-card'.

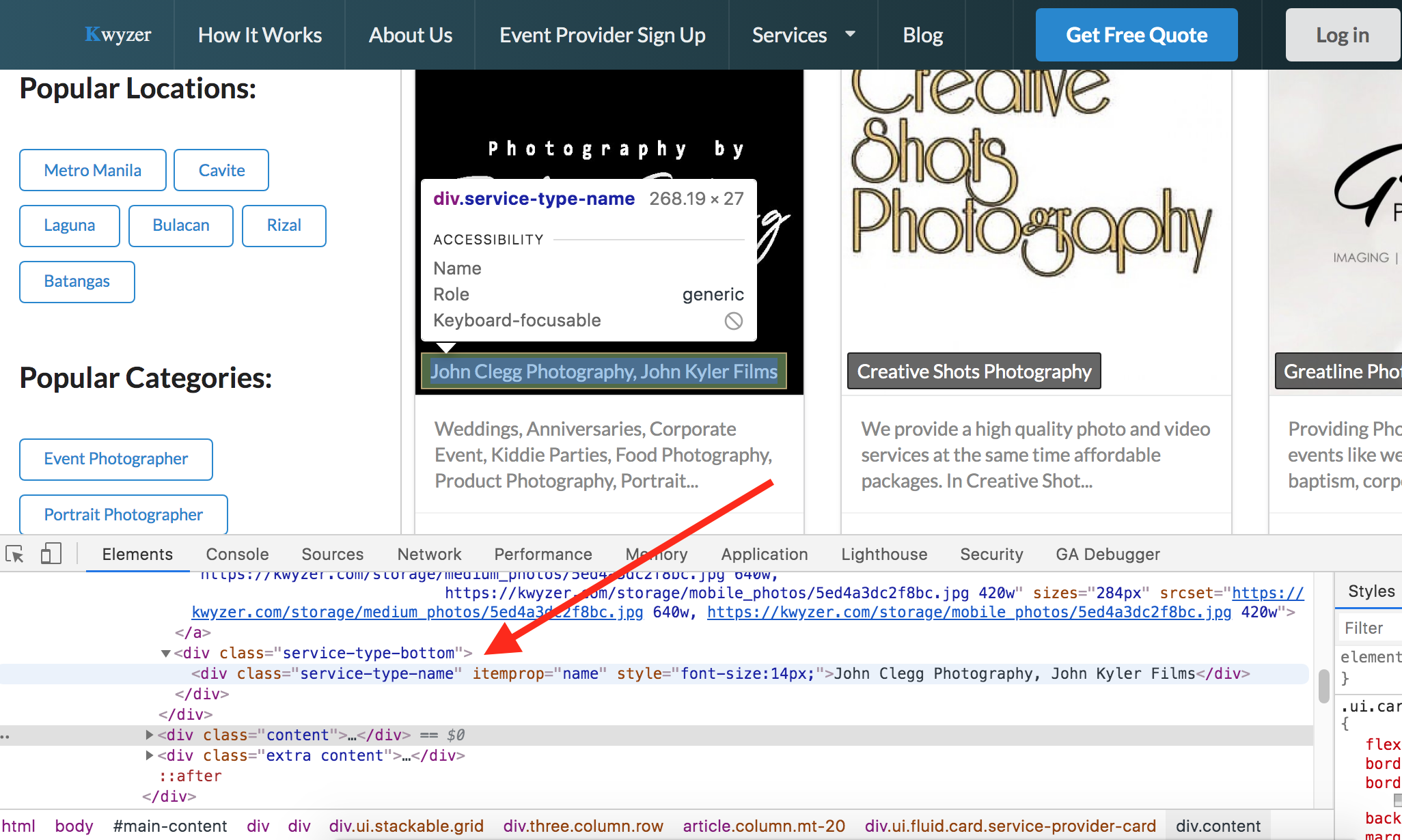
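To experiment with find_all without sending requests to the live site, you can feed BeautifulSoup a small HTML string. The markup below is hypothetical and only mimics the card structure described in this post. It also shows that a div matches even when 'service-provider-card' is just one of several classes on it.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified markup modeled on the card structure described in this post
html = """
<div class="ui card service-provider-card">
  <div class="service-type-name">Juan Photography</div>
</div>
<div class="service-provider-card">
  <div class="service-type-name">Maria Studios</div>
</div>
<div class="sidebar">Not a card</div>
"""

soup = BeautifulSoup(html, "html.parser")

# find_all returns a list-like ResultSet of every matching tag.
# A div matches if 'service-provider-card' is ANY of its classes,
# so the first div (which also has 'ui' and 'card') is included.
cards = soup.find_all("div", attrs={"class": "service-provider-card"})
print(len(cards))  # 2
```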

You can locate your target information with the developer tools in Chrome or Firefox: right-click the desired section and choose Inspect. Every website has a different HTML structure, so look for the pattern and note the ID or CLASS attributes of your target elements.
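Once you have noted an ID or a CLASS in the inspector, BeautifulSoup gives you a few equivalent ways to target it. This minimal sketch uses hypothetical markup (the 'provider-list' ID is made up) to show searching by ID, by class with the `class_` keyword, and with a CSS selector through `select()`. Both Chrome and Firefox can also copy a CSS selector for an element from the inspector.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: a container identified by an ID, cards identified by a CLASS
html = """
<div id="provider-list">
  <div class="service-provider-card">Card A</div>
  <div class="service-provider-card">Card B</div>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# By ID: IDs should be unique on a page, so find() is enough
provider_list = soup.find(id="provider-list")

# By CLASS: note the trailing underscore, because 'class' is a Python keyword
cards = provider_list.find_all("div", class_="service-provider-card")

# The same search written as a CSS selector
same_cards = soup.select("#provider-list div.service-provider-card")

print([card.get_text(strip=True) for card in cards])  # ['Card A', 'Card B']
print(len(same_cards))  # 2
```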

We now iterate over all the elements that match our criteria (the target class):

```python
photographers = []

for serviceProviderCard in serviceProviderCards:
    serviceProviderName = serviceProviderCard.find('div', attrs={'class': 'service-type-name'})

    rate = serviceProviderCard.find('div', attrs={'class': 'extra content'}) \
        .find('div', attrs={'class': 'mt-5'}) \
        .find('strong') \
        .text

    photographers.append({'name': serviceProviderName.text.strip(), 'rate': rate.strip()})
```

```python
serviceProviderName = serviceProviderCard.find('div', attrs={'class': 'service-type-name'})
```

Inside the card, we found that the photographer's name belongs to a div with the class 'service-type-name'.
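The `.text` attribute returns all the text inside a tag, including any newlines and indentation from the HTML source. A minimal sketch with hypothetical markup shows how `get_text(strip=True)` cleans that up before the name goes into your dataset:

```python
from bs4 import BeautifulSoup

# Hypothetical card markup; real pages often pad text with newlines and spaces
card_html = """
<div class="service-provider-card">
  <div class="service-type-name">
      Juan Photography
  </div>
</div>
"""
card = BeautifulSoup(card_html, "html.parser").find("div", attrs={"class": "service-provider-card"})
name_tag = card.find("div", attrs={"class": "service-type-name"})

print(repr(name_tag.text))                  # includes the surrounding newlines and spaces
print(repr(name_tag.get_text(strip=True)))  # 'Juan Photography'
```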

```python
rate = serviceProviderCard.find('div', attrs={'class': 'extra content'}).find('div', attrs={'class': 'mt-5'}).find('strong').text
```

Getting the rate is more complicated, but still possible by chaining multiple 'find' calls. We know that it is inside a div with the class 'extra content', then a div with the class 'mt-5', then a strong tag.
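Each find() in the chain returns None when nothing matches, so a single missing element makes the next .find() raise an AttributeError. A CSS selector can express the same path in one `select_one()` call, which returns None instead of crashing. In the selector, `.extra.content` means "has both classes". The markup and the rate value below are hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical card markup following the path described above
card_html = """
<div class="service-provider-card">
  <div class="extra content">
    <div class="mt-5">Starts at <strong>PHP 5,000</strong></div>
  </div>
</div>
"""
card = BeautifulSoup(card_html, "html.parser")

# Chained find(): works, but raises AttributeError if any step returns None
rate_chained = (
    card.find("div", attrs={"class": "extra content"})
    .find("div", attrs={"class": "mt-5"})
    .find("strong")
    .text
)

# Equivalent CSS selector: returns None when the path is missing
rate_tag = card.select_one("div.extra.content div.mt-5 strong")
rate_selected = rate_tag.get_text(strip=True) if rate_tag else None

print(rate_chained, "|", rate_selected)  # PHP 5,000 | PHP 5,000
```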

Here we create a DataFrame from our list of photographers and save it as a CSV file (index=False keeps pandas from writing the row numbers as an extra column):

```python
df = pd.DataFrame(photographers)
df.to_csv('photographers.csv', index=False)
```

Note: a website's HTML structure may change over time, so you may need to update your BeautifulSoup selectors from time to time.
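One way to notice a layout change early is to make the extraction fail loudly when no cards are found and to record missing fields as None instead of crashing. The sketch below wraps the card parsing in a hypothetical `extract_photographers` function. It uses the same class names described in this post, written as CSS selectors, and assumes the page has already been downloaded.

```python
from bs4 import BeautifulSoup


def extract_photographers(html):
    """Parse listing HTML into dicts; missing fields become None instead of crashing.

    extract_photographers is a hypothetical helper. It raises ValueError when no
    cards are found at all, which usually means the page layout (or the selector)
    has changed.
    """
    soup = BeautifulSoup(html, "html.parser")
    cards = soup.select("div.service-provider-card")
    if not cards:
        raise ValueError("No 'service-provider-card' elements found; check the selectors")

    photographers = []
    for card in cards:
        name_tag = card.select_one("div.service-type-name")
        rate_tag = card.select_one("div.extra.content div.mt-5 strong")
        photographers.append({
            "name": name_tag.get_text(strip=True) if name_tag else None,
            "rate": rate_tag.get_text(strip=True) if rate_tag else None,
        })
    return photographers
```

In the script above, you could call extract_photographers(response.text) in place of the for loop and pass the result to pd.DataFrame.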

You can do much more with BeautifulSoup; check out its official documentation to learn more.

Now that we have more data, we can proceed to answer our initial problem: what is the average rate of photographers in the Philippines? If you think you need more data, you can adapt the code here to match your new target website.
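The scraped rates are text, so they have to be converted to numbers before you can average them. The exact rate format on the site is an assumption here: this sketch supposes strings like "PHP 5,000" or "₱12,500.50", and takes the first number in each string (so a range would count as its lower bound). The sample rows are hypothetical; in practice you would load photographers.csv instead.

```python
import math
import re

import pandas as pd


def parse_rate(rate_text):
    """Turn a rate string such as 'PHP 5,000' into a float; return NaN if no number is found.

    The rate format is an assumption: check the real values in photographers.csv first.
    """
    if not isinstance(rate_text, str):
        return math.nan
    match = re.search(r"\d[\d,]*(?:\.\d+)?", rate_text)
    if match is None:
        return math.nan
    return float(match.group().replace(",", ""))


# In practice: df = pd.read_csv('photographers.csv')
# Hypothetical sample rows, for illustration only
df = pd.DataFrame({
    "name": ["Juan Photography", "Maria Studios", "Pedro Films"],
    "rate": ["PHP 5,000", "₱12,500.50", "Contact for rates"],
})

df["rate_value"] = df["rate"].apply(parse_rate)

# mean() skips NaN values, so unparseable rates are left out of the average
average_rate = df["rate_value"].mean()
print(f"Average rate of {df['rate_value'].count()} photographers: {average_rate:,.2f}")
# Average rate of 2 photographers: 8,750.25
```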

And that's it! You can now scrape websites with Python and BeautifulSoup, as long as their terms allow it. Thank you for reading this blog, and have a nice day.